
The Rise of Deepfakes

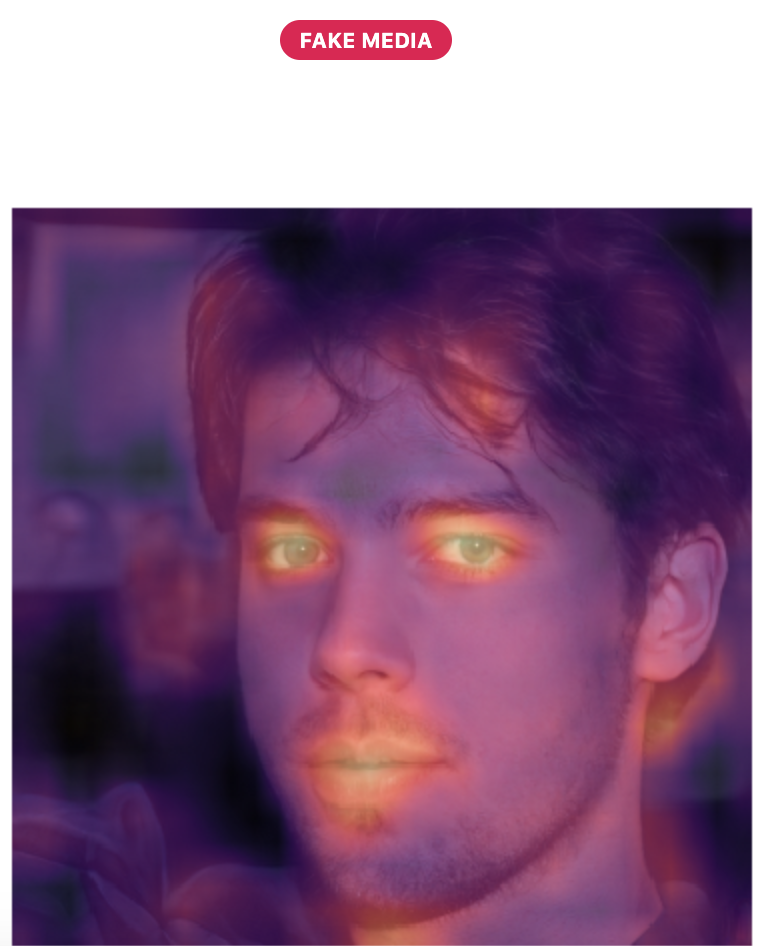

- Deepfakes are synthetic media in which a person in an existing image or video is replaced with someone else’s likeness

- In recent months, videos of influential celebrities and politicians have surfaced that present a false, altered version of their beliefs or gestures

Deepfakes leverage powerful techniques from machine learning and artificial intelligence to manipulate and generate visual and audio content with a high potential to deceive. The purpose of this article is to encourage research and development efforts, not to promote or aid the creation of nefarious content.

Introduction

Deepfakes are synthetic media in which a person in an existing image or video is replaced with someone else’s likeness. In recent months, videos of influential celebrities and politicians have surfaced that present a false, altered version of their beliefs or gestures.

Whilst deep learning has been successfully applied to complex problems ranging from big data analytics to computer vision, it is crucial to control both the content it generates and its availability to the public.

In recent months, a number of mitigation mechanisms have been proposed, with neural networks and artificial intelligence at the heart of them. Technologies that can automatically detect and assess the integrity of visual media are therefore indispensable if we wish to fight back against adversarial attacks. (Nguyen, 2019)

Late 2017

Deepfakes as we know them first started to gain attention in December 2017, after Vice’s Samantha Cole published an article on Motherboard.

The article talks about the manipulation of celebrity faces to recreate famous scenes and how this technology can be misused for blackmail and illicit purposes.

The videos were significant because they marked the first notable instance of a single person being able to create high-quality, convincing deepfakes easily and quickly.

Cole goes on to highlight a tension: the tools involved are made freely available by corporations so that students can build the knowledge and skills they need for their studies at school and university.

Open-source machine learning tools like TensorFlow, which Google makes freely available to researchers, graduate students, and anyone with an interest in machine learning. — Samantha Cole

Deepfakes can differ greatly in quality from earlier efforts to superimpose faces onto other bodies. A good deepfake, created by a model trained on hours of high-quality footage, is so convincing that humans struggle to tell whether it is real. In turn, researchers have shown interest in developing neural networks that assess the authenticity of such videos and flag them as fake.

In general, a good deepfake has seamless insertions around the mouth, smooth head movements, and coloration that matches its surroundings. Gone are the days of simply superimposing a head onto a body and animating it by hand, an approach whose errors remain noticeable and lead to mismatches with the surrounding context.
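
To illustrate why a raw paste is easy to spot and why matching coloration helps, here is a minimal sketch. It assumes tiny grayscale images stored as nested lists, and the function names (`paste`, `match_brightness`, `seam_difference`) are hypothetical helpers written for this example. It is not how deepfake tools work internally, since those rely on learned models. It only measures the brightness jump at the edge of a pasted patch before and after a simple brightness shift.

```python
def paste(background, patch, top, left):
    """Return a copy of background with patch copied in at (top, left)."""
    out = [row[:] for row in background]
    for r, patch_row in enumerate(patch):
        for c, value in enumerate(patch_row):
            out[top + r][left + c] = value
    return out


def match_brightness(patch, background, top, left):
    """Shift the patch so its mean equals the mean of the region it will cover."""
    height, width = len(patch), len(patch[0])
    covered = [background[top + r][left + c]
               for r in range(height) for c in range(width)]
    patch_values = [v for row in patch for v in row]
    offset = sum(covered) / len(covered) - sum(patch_values) / len(patch_values)
    # Clamp to the usual 0..255 grayscale range.
    return [[min(255, max(0, v + offset)) for v in row] for row in patch]


def seam_difference(image, top, left, height, width):
    """Mean absolute gap between each patch border pixel and the pixel just
    outside it. Assumes the patch does not touch the image border."""
    gaps = []
    for r in range(top, top + height):
        gaps.append(abs(image[r][left] - image[r][left - 1]))
        gaps.append(abs(image[r][left + width - 1] - image[r][left + width]))
    for c in range(left, left + width):
        gaps.append(abs(image[top][c] - image[top - 1][c]))
        gaps.append(abs(image[top + height - 1][c] - image[top + height][c]))
    return sum(gaps) / len(gaps)


# Toy data: a flat grey background and a noticeably brighter 2x2 patch.
background = [[100] * 6 for _ in range(6)]
patch = [[150, 160], [170, 160]]

naive = paste(background, patch, 2, 2)
matched = paste(background, match_brightness(patch, background, 2, 2), 2, 2)

naive_gap = seam_difference(naive, 2, 2, 2, 2)
matched_gap = seam_difference(matched, 2, 2, 2, 2)
print(naive_gap, matched_gap)
```

On this toy data the seam gap drops sharply once the patch brightness is matched to its surroundings. Real footage would also need smooth blending at the edges and consistency from frame to frame, which this sketch does not attempt.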

Early 2018

In January 2018, a proprietary desktop application called FakeApp was launched. The app allowed users to easily create and share videos with their faces swapped with each other. As of 2019, FakeApp has been superseded by open-source alternatives such as Faceswap and the command-line-based DeepFaceLab. (Nguyen, 2019)

With this technology so widely available, projects have sprung up on sites such as GitHub offering new methodologies for combatting such attacks. In the paper ‘Using Capsule Networks To Detect Forged Images and Videos’, Nguyen discusses how forged images and videos can be used to bypass facial authentication systems in secure environments.

The quality of manipulated images and videos has seen significant improvement with the development of advanced network architectures and the use of large amounts of training data that previously wasn’t available.

Later 2018

Platforms such as Reddit started to ban deepfakes after fake news and videos began circulating from specific communities on their sites. Reddit took it upon itself to delete these communities in an effort to protect its users.

A few days later, BuzzFeed published a frighteningly realistic video that went viral. The video showed a deepfake of Barack Obama. Unlike the University of Washington video, it made Obama say words that weren’t his own, which helped raise awareness of this technology.

BuzzFeed created the video with Jordan Peele as part of a campaign to raise awareness of this software.

Early 2019

In the last year, several manipulated videos of politicians and other high-profile individuals have gone viral, highlighting the continued dangers of deepfakes, and forcing large platforms to take a position.

Following BuzzFeed’s disturbingly realistic Obama deepfake, instances of manipulated videos of other high-profile subjects began to go viral, and seemingly fool millions of people online.

Although most of these videos were cruder than deepfakes, relying on rudimentary film editing rather than AI, they sparked sustained concern about the power of deepfakes and other forms of video manipulation, and forced technology companies to take a stance on what to do with such content. (Business Insider, 2019)

Source: https://medium.com/swlh/the-rise-of-deepfakes-19972498487a