- Entered into an agreement on April 13, 2020 securing the rights to import iONEBIO Inc.’s iLAMP Novel-CoV19 Detection Kit (real-time Reverse Transcription LAMP-PCR assay system) into Canada

- Under the terms of this agreement, Datametrex was also given rights to sell the tests into other countries around the globe, including the United States

- iONEBIO Inc. claims their test kits provide results within approximately 15 to 20 minutes with 99.9% accuracy

TORONTO, April 16, 2020 – Datametrex AI Limited (the “Company” or “Datametrex”) is pleased to announce that it entered into an agreement on April 13, 2020 securing the rights to import iONEBIO Inc.’s iLAMP Novel-CoV19 Detection Kit (real-time Reverse Transcription LAMP-PCR assay system) into Canada. Under the terms of this agreement, Datametrex was also given rights to sell the tests into other countries around the globe, including the United States. Datametrex has developed strong relationships with many large multi-national companies in South Korea. As a result of these relationships, the Canadian Embassy in Seoul contacted Datametrex to ask for help in procuring rapid test kits.

The COVID-19 test kits are manufactured by iONEBIO Inc. in South Korea, and are the same test kits that have been successfully used in South Korea's “drive-through” testing stations. This kit has also been used to test every traveller entering South Korea, and those testing positive for COVID-19 were immediately isolated. Datametrex believes a key factor that allowed South Korea to slow the spread of COVID-19 was its ability to swiftly identify and quarantine those infected by testing millions of people using these test kits. These test kits were first approved for use by the Korean Ministry of Food and Drug Safety on August 30, 2019. In addition, an independent study was conducted by Dankuk University (DKU) in Seoul, South Korea, and it was completed and approved on April 2, 2020.

Health Canada must approve these COVID-19 test kits before they can be used in Canada. The Company is currently working with Health Canada to have the approval of these kits fast-tracked. These test kits are currently in use in some European and Asian countries outside of South Korea. Work has also commenced with the FDA in the United States to obtain FDA approval and to authorize the tests under the Emergency Use Authorization Program run by the US Center for Disease Control (CDC).

iONEBIO Inc. claims their test kits provide results within approximately 15 to 20 minutes with 99.9% accuracy. Each kit contains 288 individual tests, all of which can be completed in one hour. By utilizing these kits, screening stations can be set up almost anywhere and will allow for the early detection and swift quarantine of infected persons, which is believed to be a key factor in slowing the spread of the novel coronavirus. Early detection using rapid tests will also provide further protection to Canada’s front-line workers, especially health care professionals.

South Korea has used this kit to test millions of its citizens and, as of April 14, 2020, there have been 10,654 cases of COVID-19 and 222 deaths reported. In North America, where the use of a rapid test has not been implemented on a large scale, there have been, as of April 14, 2020, 25,680 cases and 780 deaths in Canada and 588,465 cases and 23,711 deaths in the US. (All data collected by Datametrex’s Covid-19 dashboard, which is available at http://www.datametrex.com/covid-board.html)

“We strongly believe these kits will assist Canada in slowing the spread of COVID-19 and ultimately save lives. It’s incredibly rewarding for us to be able to help Canada combat the spread of COVID-19,” said Andrew Ryu, Chairman of the Company.

If the test kits are approved by Health Canada and the Canadian government purchases these test kits as expected, the Canadian government will be required to pay for the kits in advance. Datametrex expects that all of its costs and expenses related to the import of these tests will be satisfied out of the purchase price paid for the tests by the Canadian government.

The Company is not making any express or implied claims that it has the ability to treat the COVID-19 virus at this time.

About Datametrex AI Limited

Datametrex AI Limited is a technology-focused company with exposure to Artificial Intelligence and Machine Learning through its wholly-owned subsidiary, Nexalogy (www.nexalogy.com).

Additional information on Datametrex is available at www.datametrex.com

For further information, please contact:

Marshall Gunter – CEO

Phone: (514) 295-2300

Email: [email protected]

Jeff Stevens – Co-Founder

Phone: (647) 400-8494

Email: [email protected]

Neither the TSX Venture Exchange nor its Regulation Services Provider (as that term is defined in the policies of the TSX Venture Exchange) accepts responsibility for the adequacy or accuracy of this release.

Forward-Looking Statements

This news release contains “forward-looking information” within the meaning of applicable securities laws. All statements contained herein that are not clearly historical in nature may constitute forward-looking information. In some cases, forward-looking information can be identified by words or phrases such as “may”, “will”, “expect”, “likely”, “should”, “would”, “plan”, “anticipate”, “intend”, “potential”, “proposed”, “estimate”, “believe” or the negative of these terms, or other similar words, expressions and grammatical variations thereof, or statements that certain events or conditions “may” or “will” happen, or by discussions of strategy.

Readers are cautioned to consider these and other factors, uncertainties and potential events carefully and not to put undue reliance on forward-looking information. The forward-looking information contained herein is made as of the date of this press release and is based on the beliefs, estimates, expectations, and opinions of management on the date such forward-looking information is made. The Company undertakes no obligation to update or revise any forward-looking information, whether as a result of new information, estimates or opinions, future events or results or otherwise or to explain any material difference between subsequent actual events and such forward-looking information, except as required by applicable law.

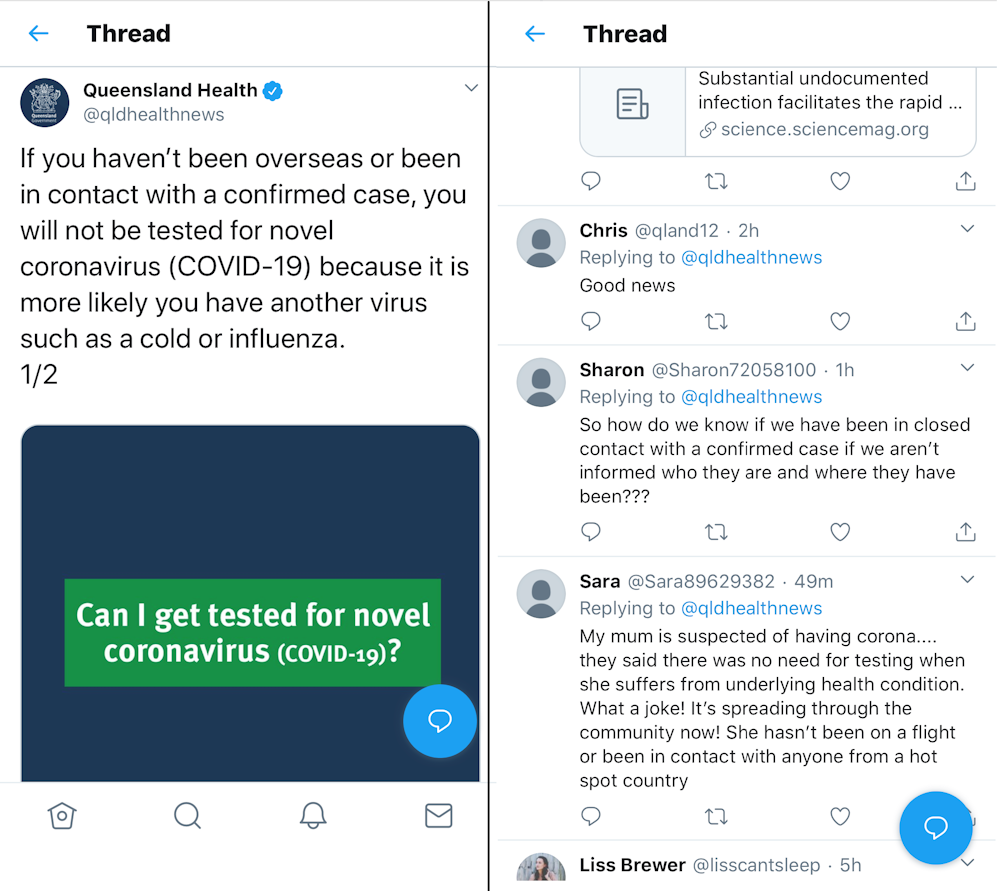

The official tweet from Queensland Health and the bots’ responses.

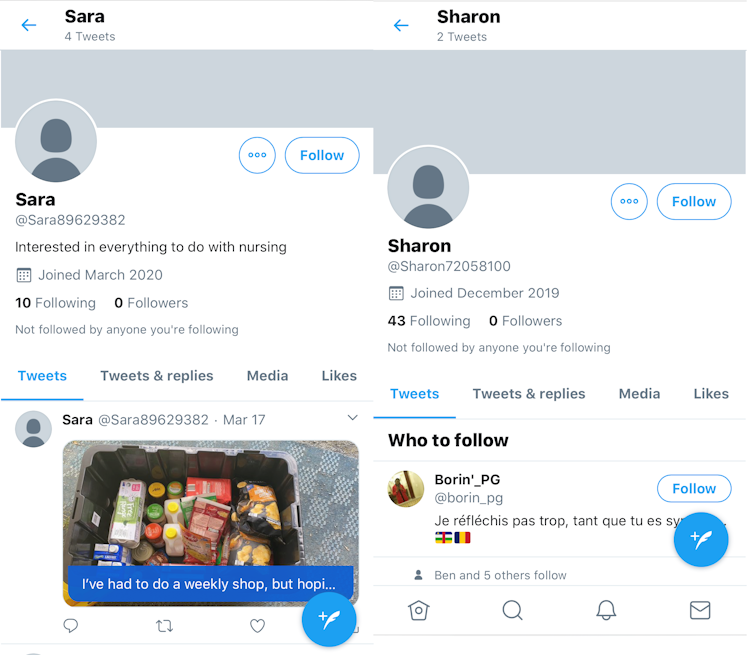

Screenshots of the accounts of ‘Sharon’ and ‘Sara’.

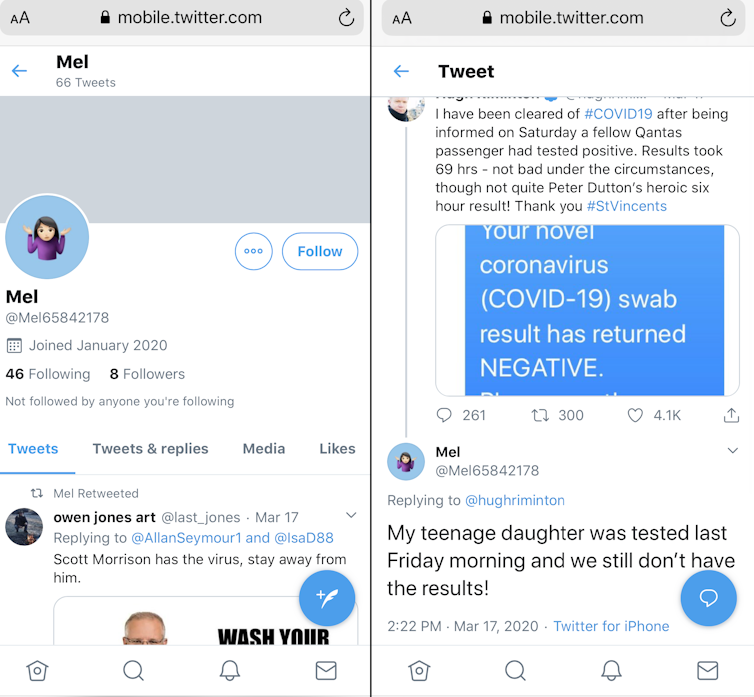

Bot ‘Mel’ spread false information about a possible delay in COVID-19 results, and retweeted hateful messages.

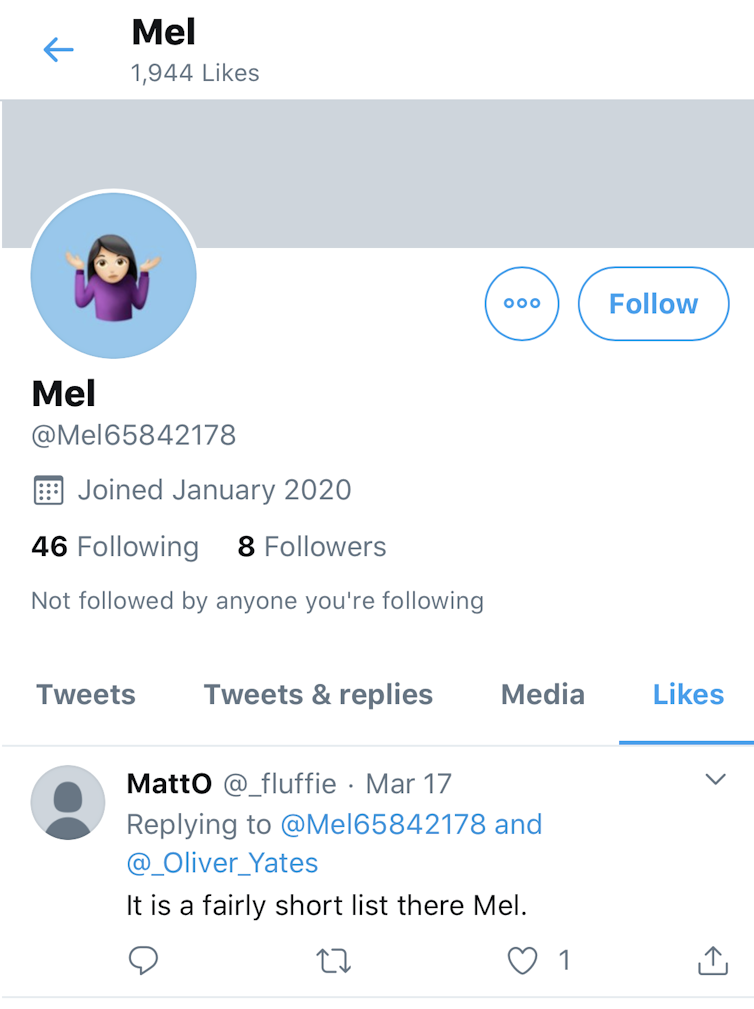

An account that seemed to belong to a real Twitter user began engaging with ‘Mel’.
