- Established a joint venture (JV) with LOTTE to co-bid on contracts with the Ministry of Health in South Korea

- The JV aims to improve the accuracy and transparency of information about the COVID-19 catastrophe across media, social media, and various other data sources

TORONTO, April 06, 2020 – Datametrex AI Limited (the “Company” or “Datametrex”) is pleased to announce that it has established a joint venture (JV) with LOTTE to co-bid on contracts with the Ministry of Health in South Korea. The JV aims to improve the accuracy and transparency of information about the COVID-19 catastrophe across media, social media, and various other data sources.

On April 2, 2020, Datametrex announced that it had been awarded preferred vendor status with LOTTE. One of the many benefits of being a preferred vendor is the opportunity to create JVs and co-bid on contracts with LOTTE’s support. Datametrex and LOTTE recently agreed to create a JV that will use Datametrex’s AI and other relevant technologies to improve the accuracy and transparency of data, including the ability to filter disinformation, for the Ministry of Health in South Korea. This opportunity arose from the Coronavirus and COVID-19 catastrophe. South Korea’s health department has been the leader in screening and flattening the curve, and it is a role model for other countries on how to manage the COVID-19 pandemic.

The Company succeeded in pitching the opportunity and creating a JV with LOTTE largely because of the work it has done for the US Federal Government on COVID-19 and the Coronavirus. On April 1, 2020, Datametrex released a report that clearly identified Chinese authorities manipulating social media surrounding the Coronavirus and COVID-19. Their intent was to sway public opinion to negatively impact the United States Government and President Trump while positively impacting China and President Xi.


“I am thrilled to share this update with shareholders; our team has done a fantastic job fast-tracking with LOTTE. The work we have done this past year training our technology in various languages such as Korean, Chinese, French, and Russian is really starting to pay off,” says Marshall Gunter, CEO of the Company.

About Datametrex AI Limited

Datametrex AI Limited is a technology focused company with exposure to Artificial Intelligence and Machine Learning through its wholly owned subsidiary, Nexalogy (www.nexalogy.com).

Additional information on Datametrex is available at: www.datametrex.com

For further information, please contact:

Marshall Gunter – CEO

Phone: (514) 295-2300

Email: [email protected]

Jeff Stevens – Co-Founder

Phone: (647) 400-8494

Email: [email protected]

Neither the TSX Venture Exchange nor its Regulation Services Provider (as that term is defined in the policies of the TSX Venture Exchange) accepts responsibility for the adequacy or accuracy of this release.

Forward-Looking Statements

This news release contains “forward-looking information” within the meaning of applicable securities laws. All statements contained herein that are not clearly historical in nature may constitute forward-looking information. In some cases, forward-looking information can be identified by words or phrases such as “may”, “will”, “expect”, “likely”, “should”, “would”, “plan”, “anticipate”, “intend”, “potential”, “proposed”, “estimate”, “believe” or the negative of these terms, or other similar words, expressions and grammatical variations thereof, or statements that certain events or conditions “may” or “will” happen, or by discussions of strategy.

Readers are cautioned to consider these and other factors, uncertainties and potential events carefully and not to put undue reliance on forward-looking information. The forward-looking information contained herein is made as of the date of this press release and is based on the beliefs, estimates, expectations and opinions of management on the date such forward-looking information is made. The Company undertakes no obligation to update or revise any forward-looking information, whether as a result of new information, estimates or opinions, future events or results or otherwise or to explain any material difference between subsequent actual events and such forward-looking information, except as required by applicable law.

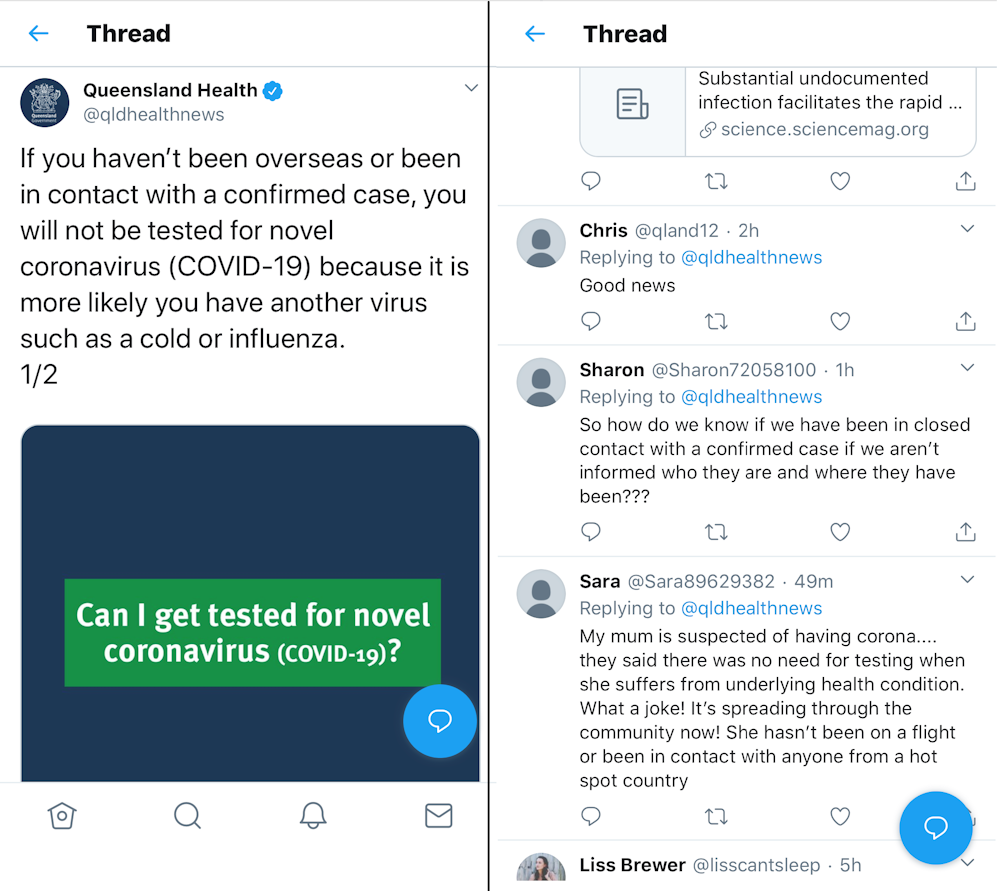

The official tweet from Queensland Health and the bots’ responses.

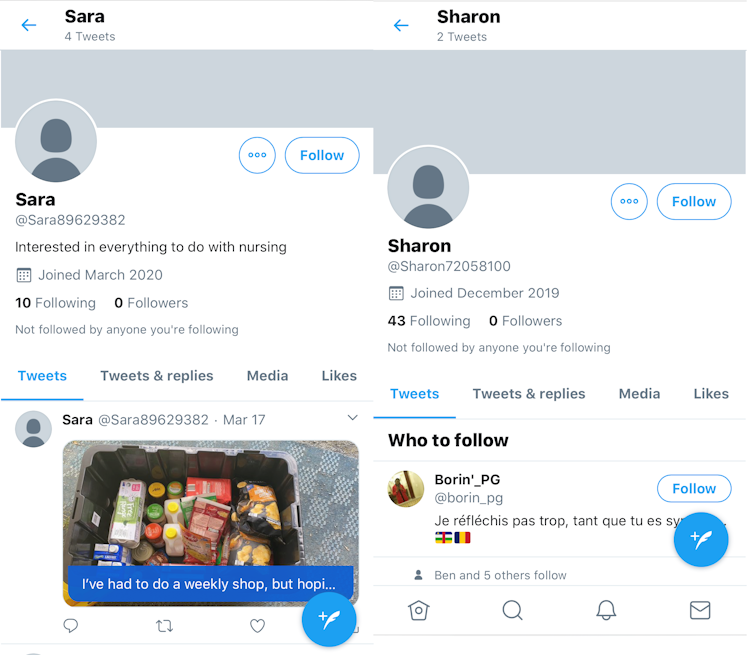

Screenshots of the accounts of ‘Sharon’ and ‘Sara’.

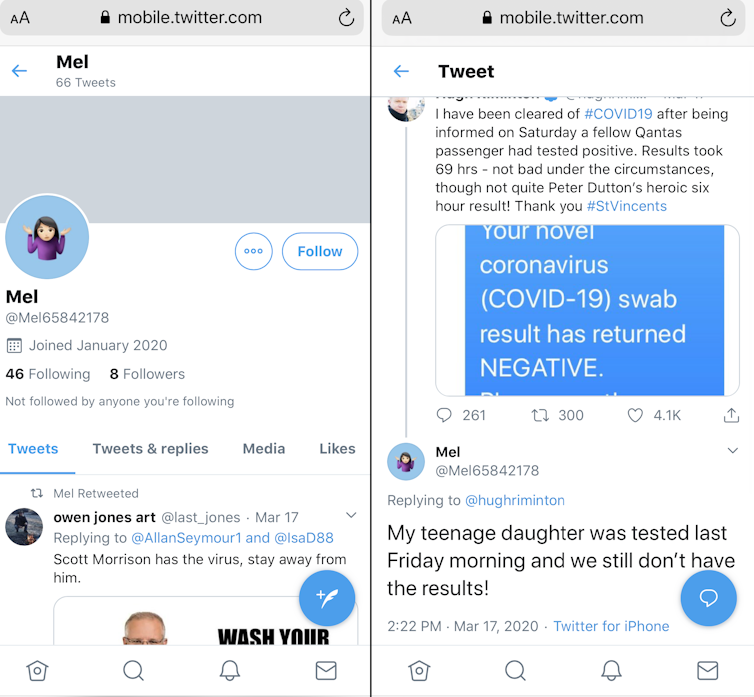

Bot ‘Mel’ spread false information about a possible delay in COVID-19 results, and retweeted hateful messages.

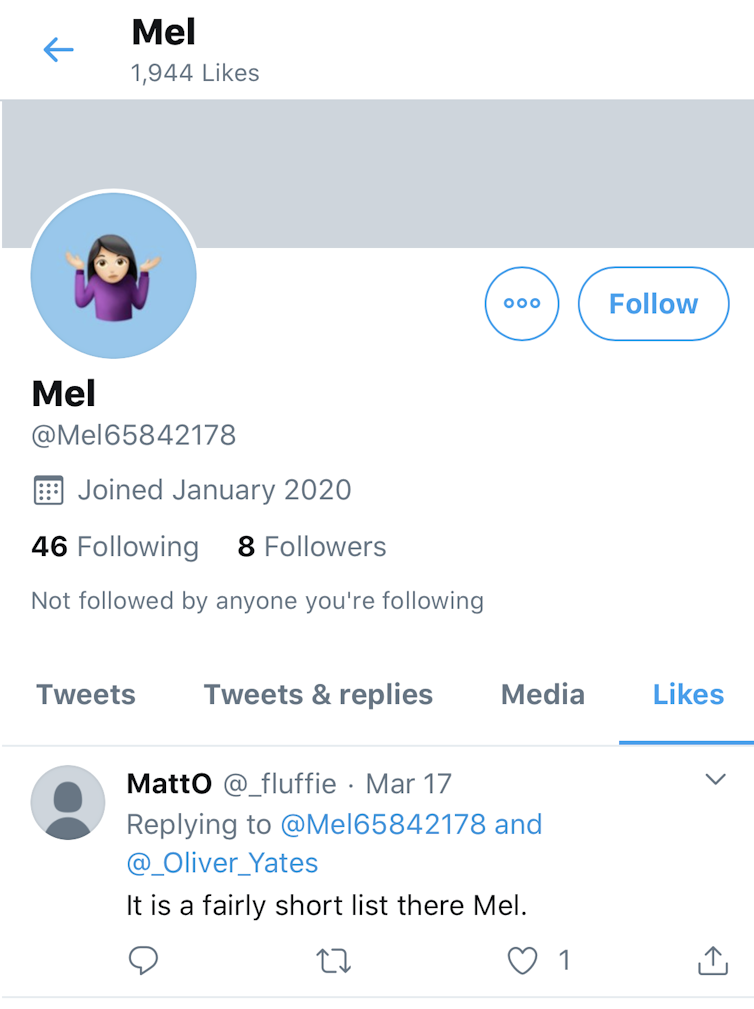

An account that seemed to belong to a real Twitter user began engaging with ‘Mel’.